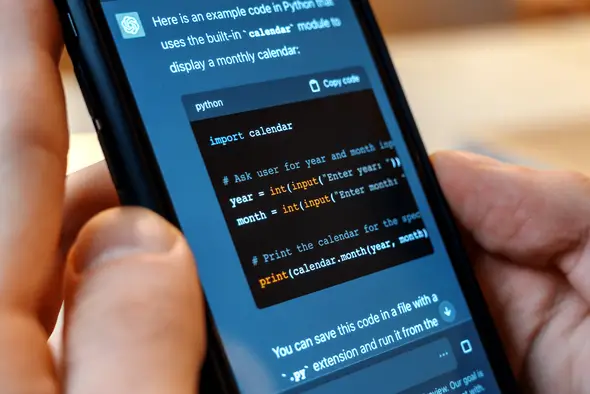

In the rapidly evolving field of artificial intelligence, ChatGPT is one of the most prominent examples of large language model technology. Developed by OpenAI and originally built on the GPT-3.5 series of models, ChatGPT offers strong natural language processing capabilities. As organizations and individuals integrate ChatGPT into a growing range of applications, concerns have arisen about whether its output can be detected. Can text written by ChatGPT be reliably distinguished from human writing? This article examines how ChatGPT detection works and the challenges involved in identifying content produced by this conversational AI.

Understanding ChatGPT

ChatGPT was originally built on GPT-3.5, a large language model trained on a broad and diverse body of text. It excels at understanding context, generating coherent responses, and mimicking human-like language patterns. This level of sophistication has led organizations to use ChatGPT in customer service, content creation, and even brainstorming. As artificial intelligence continues to advance, there is growing concern about its potential impact on various industries, particularly about how ChatGPT could destabilize white-collar work by automating tasks traditionally performed by humans.

Detection Challenges

Detecting ChatGPT output is difficult precisely because the model produces contextually relevant, coherent responses. Traditional methods for identifying AI-generated content often rely on surface patterns, inconsistencies, or specific markers, such as stock phrases or unusual repetition. ChatGPT's skill at emulating the nuances of human language, however, allows it to evade many of these conventional techniques.
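To make the idea of "specific markers" concrete, here is a minimal sketch of a rule-based heuristic in Python. The phrase list, the `marker_score` and `looks_generated` helpers, and the threshold are all hypothetical choices for illustration; they are not taken from any real detection tool.

```python
import re

# Hypothetical marker phrases, chosen for illustration only; they are not
# taken from any published detector.
STOCK_PHRASES = (
    "as an ai language model",
    "it is important to note",
    "in conclusion",
    "delve into",
)


def marker_score(text: str) -> float:
    """Return stock-phrase hits per 100 words, a crude rule-based signal."""
    lowered = text.lower()
    words = re.findall(r"[a-z']+", lowered)
    if not words:
        return 0.0
    hits = sum(lowered.count(phrase) for phrase in STOCK_PHRASES)
    return 100.0 * hits / len(words)


def looks_generated(text: str, threshold: float = 1.0) -> bool:
    """Flag text whose marker density exceeds an arbitrary threshold."""
    return marker_score(text) > threshold


print(looks_generated("As an AI language model, I cannot browse the web."))  # True
print(looks_generated("I went to the shop and bought bread."))  # False
```

Because the check only counts fixed phrases, a response that happens to avoid them, or that has been lightly edited, passes undetected. That is the weakness of marker-based methods when facing a model as fluent as ChatGPT.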

One notable aspect of ChatGPT is its adaptability to different writing styles and tones. Compared with earlier language models, ChatGPT can switch more fluidly between formal and informal language and can imitate different personas on request. This adaptability further complicates the task of distinguishing AI-generated from human-written content.

Machine Learning in the Detection Struggle

Ironically, the same machine learning techniques used to build ChatGPT are now being used to detect its output. Researchers are exploring approaches such as adversarial training and fine-tuning to create classifiers that can distinguish AI-generated from human-written text. However, as ChatGPT continues to evolve, these detectors face an uphill battle to keep pace, since a classifier trained on one model's output may not generalize to newer versions.
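As a simplified illustration of training a model to separate the two classes, the sketch below implements a multinomial naive Bayes classifier over word counts. This is a deliberately simpler technique than the adversarial training or fine-tuning mentioned above, and the class name `NaiveBayesDetector` and the toy training sentences are hypothetical; the "ai" examples are invented, not real ChatGPT output.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesDetector:
    """Multinomial naive Bayes over word counts, with add-one (Laplace) smoothing.

    Hypothetical illustration only; labels can be any strings, e.g. "ai" and "human".
    """

    def fit(self, texts: list[str], labels: list[str]) -> "NaiveBayesDetector":
        self.class_counts = Counter(labels)
        self.word_counts = {label: Counter() for label in self.class_counts}
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = set()
        for counts in self.word_counts.values():
            self.vocab.update(counts)
        self.totals = {label: sum(c.values()) for label, c in self.word_counts.items()}
        return self

    def log_posterior(self, text: str, label: str) -> float:
        """Unnormalized log P(label | text) = log P(label) + sum of log P(word | label)."""
        n_docs = sum(self.class_counts.values())
        score = math.log(self.class_counts[label] / n_docs)
        denom = self.totals[label] + len(self.vocab)
        for word in tokenize(text):
            if word in self.vocab:  # words never seen in training are skipped
                score += math.log((self.word_counts[label][word] + 1) / denom)
        return score

    def predict(self, text: str) -> str:
        """Return the label with the highest posterior score."""
        return max(self.class_counts, key=lambda label: self.log_posterior(text, label))


# Toy, made-up training data purely for illustration.
train_texts = [
    "furthermore it is important to note the key considerations",
    "in conclusion these considerations are important to note",
    "lol cant make it tonight, traffic is awful",
    "ugh my train got cancelled again",
]
train_labels = ["ai", "ai", "human", "human"]
detector = NaiveBayesDetector().fit(train_texts, train_labels)
print(detector.predict("it is important to note these considerations"))  # ai
print(detector.predict("my train is late again tonight"))  # human
```

A classifier like this only learns the vocabulary of its training data, which illustrates the uphill battle: when a newer model writes differently, the learned word statistics no longer match and the detector must be retrained.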

ChatGPT’s Non-Deterministic Nature

One of the fundamental challenges in detecting ChatGPT lies in its non-deterministic nature. The model's responses are not pre-programmed; instead, they are generated one token at a time by sampling from probability distributions learned during training. As a result, the same prompt can produce different responses on different runs, which makes it hard to establish a fixed set of rules or markers for identification. Unlike rule-based systems, ChatGPT's responses are context-dependent and can vary even for identical inputs.
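The variability comes from sampling. The sketch below assumes standard temperature sampling from a softmax distribution, a common decoding strategy for language models; the vocabulary, logits, and helper names are hypothetical, and OpenAI's exact decoding settings for ChatGPT are not covered here.

```python
import math
import random
from collections import Counter


def softmax(logits: list[float], temperature: float) -> list[float]:
    """Convert logits to probabilities; lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(vocab: list[str], logits: list[float], temperature: float,
                      rng: random.Random) -> str:
    """Draw one token at random according to the temperature-scaled probabilities."""
    probs = softmax(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]


# Hypothetical next-token candidates and scores after some fixed prompt.
vocab = ["great", "good", "fine"]
logits = [2.0, 1.5, 0.5]

rng = random.Random(0)  # seeded only so this demo is reproducible
samples = [sample_next_token(vocab, logits, 1.0, rng) for _ in range(100)]
print(Counter(samples))  # the same "prompt" typically yields a mix of tokens
print(softmax(logits, 1.0))
print(softmax(logits, 0.2))  # most of the mass moves onto "great"
```

Because every token is drawn this way, small differences compound over a long response, so two answers to the same question rarely share an exact, rule-detectable fingerprint.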

The Role of Context and Ambiguity

Context plays a pivotal role in ChatGPT's effectiveness: the model relies heavily on the preceding conversation to generate relevant responses. This dependence on context adds a layer of ambiguity that further complicates detection, because a reply that fits its conversation well offers few obvious signs of machine authorship. When responses are contextually accurate, distinguishing between human and AI-generated content becomes correspondingly harder.

Ethical Considerations

The debate surrounding the detectability of ChatGPT extends beyond technological challenges to ethical considerations. The ability to distinguish between AI and human-generated content has implications for transparency, trust, and accountability. In applications such as customer service or content creation, users have a right to know whether they are interacting with a machine or a human. Striking a balance between the benefits of AI and the ethical imperative of transparency remains a critical challenge.

Future Prospects and Solutions

As the field of artificial intelligence continues to advance, the detection of AI-generated content, including ChatGPT's output, is likely to remain an area of active research. Innovations in machine learning, combined with a deeper understanding of how language models work, may pave the way for more effective detection methods.

One potential avenue for improvement is the development of hybrid models that combine rule-based approaches with machine learning algorithms. By leveraging both deterministic rules and learned patterns, these models may enhance the accuracy of detection in the face of evolving AI capabilities.
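A minimal sketch of such a hybrid follows, assuming the learned component is any function that returns a probability that a text is AI-generated. The rule patterns, the `hybrid_score` function, the blending weight, and the stand-in `dummy_model` are all hypothetical.

```python
import re
from typing import Callable

# Hypothetical "hard" rule: treated as conclusive evidence of AI output.
HARD_RULES = [re.compile(r"\bas an ai language model\b", re.IGNORECASE)]

# Hypothetical "soft" cues: each one only nudges the score upward.
SOFT_CUES = [
    re.compile(pattern, re.IGNORECASE)
    for pattern in (r"\bdelve\b", r"\bin conclusion\b", r"\bfurthermore\b")
]


def hybrid_score(text: str, model_prob: Callable[[str], float],
                 rule_weight: float = 0.3) -> float:
    """Blend deterministic rules with a learned P(AI-generated) in [0, 1]."""
    if any(rule.search(text) for rule in HARD_RULES):
        return 1.0  # a hard rule overrides the learned model
    # Fraction of soft cues present, also in [0, 1].
    rule_score = sum(1 for cue in SOFT_CUES if cue.search(text)) / len(SOFT_CUES)
    return rule_weight * rule_score + (1.0 - rule_weight) * model_prob(text)


# Stand-in for a trained classifier; a real one would be learned from data.
def dummy_model(text: str) -> float:
    return 0.5


print(hybrid_score("As an AI language model, I can't do that.", dummy_model))  # 1.0
print(hybrid_score("In conclusion, we delve further.", dummy_model))  # about 0.55
print(hybrid_score("See you at noon.", dummy_model))  # about 0.35
```

The hard rule gives deterministic, explainable decisions for obvious cases, while the learned component handles everything the rules miss; `rule_weight` controls how much the soft cues can shift the model's estimate.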

Conclusion

Whether ChatGPT's output can be detected is a multifaceted question that spans technological, ethical, and practical considerations. As artificial intelligence continues to shape the way we interact with technology, the need for reliable detection mechanisms becomes increasingly evident. While researchers and engineers grapple with the complexities of identifying AI-generated content, transparency and user awareness must remain at the forefront of these discussions. Work on ChatGPT detection is ongoing, and its outcome is likely to shape the future of conversational AI.